Machine Learning in Rust, Logistic Regression

- 18 minsForeword: this is the second part of a three parts series. If you haven’t yet gotten the chance to read the first part of this series, I recommend pausing here to take a few minutes and catch up before moving ahead.

In the second part of the series, I will show you how to implement another popular machine learning algorithm, Logistic Regression in Rust. Similar to my previous blog post dedicated to Machine Learning in Rust, I will implement this statistical model from scratch. While doing so, I will present some additional Rust libraries that you can use to build a wide variety of core machine learning algorithms.

What is Logistic Regression?

Logistic regression is a classification algorithm. It is used to predict a binary or multi-class outcome based on a set of independent variables.

Wait, so why is it called regression? Shouldn’t the word “regression” be reserved to algorithms dealing with continuous dependent variable?

As Frank Harrell pointed out in his blog, logistic regression is emphatically not a classification algorithm on its own. It is only a classification algorithm in combination with a decision rule that turns a continuous function into the predicted probabilities of the outcome. Logistic regression is a regression model because it estimates the probability of class membership as a (transformation of a) multilinear function of the independent variables.

So how do we turn a regressor into a classifier?

For example, let’s say we have a datasets that contains credit card transactions. Each transaction comes with a label that tells us whether it was fraudulent or not. We want to use this dataset to train a classifier that will be able to recognize fraudulent credit card transactions. If we assume there is a linear relationship between independent (credit card data) and dependent variables (transaction class), and use linear regression formula to model this relationship we will get a quantitative response, \(y\).

\[ \begin{equation} \boldsymbol{y} = \boldsymbol{X}\beta \end{equation} \]

The problem with this model is that our prediction is unbounded on both ends. This function can produce a very large negative, as well as a very large positive number for a some input \(X\). What we want instead is to properly model a probability, \(p(X)\) that a transaction is fraudulent using a function that gives outputs between 0 and 1 for all values of \(X\). One way to do it would be to transform the output \(y\) using the Sigmoid function to return a value between 0 and 1.

\[ \begin{equation} p(X) = \frac{1}{1 + e^{-\boldsymbol{X}\beta}} \end{equation} \]

The plot of the Sigmoid function look like this

This function takes a continuous value and transforms it into a new value between 0 and 1. Now, to predict transaction class using our model, all we need is to choose a threshold value, above which the transaction is to be considered fraudulent. A sensible starting value could be 0.5 but you might decide to bring the threshold higher if you don’t want your model to trigger too many false alerts.

Great! Can I train my model using the approach described in the first part of this series?

Absolutely not! Now that equation describing our model includes a nonlinear function we cannot find model’s parameters using linear algebra. Instead, we will have to use one of many methods provided by optimization theory to find parameters of this model.

Implementing Logistic Regression in Rust

Before implementing logistic regression, let’s see how one would go about fitting the model to the credit card dataset in Python. The first 5 rows of the dataset looks like this

1

2

3

4

5

import pandas as pd

import numpy as np

credit = pd.read_csv('creditcard.csv', header=None, names=list(range(1, 31)) + ['Class'])

credit.head(5)

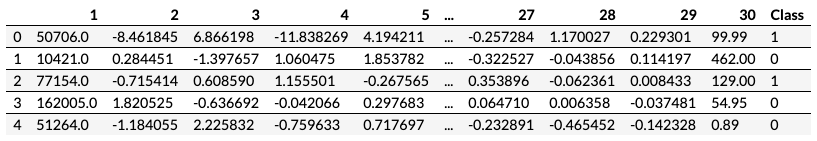

We have 30 predictors and one binary target variable that represents a transaction class. As in the first part of the series we separate predictors from target and split this dataset into test and training portions.

1

2

3

4

5

from sklearn.model_selection import train_test_split

X = credit.drop('Class', axis=1).values

y = credit.Class.values

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2)

Let’s fit logistic regression to the training data and measure model’s performance on the test set. Since our target is categorical we will have to use different function to measure model performance. The metric that we will use is called area under ROC curve and it gives us a number between 0.5 and 1. Values close to one means our classifier does a great job of separating instances that belong to different classes.

1

2

3

4

5

6

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import roc_auc_score

clf = LogisticRegression(random_state=0).fit(X_train, y_train)

y_hat = clf.predict(X_test)

roc_auc_score(y_test, y_hat)

0.9406542056074766Our AUC metric is .94. Great! Now let’s see how we can fit Logistic Regression to the same data in Rust. We will use smartcore, nalgebra and one new library I haven’t mentioned yet, argmin.

1

2

3

4

[dependencies]

smartcore = { version = "0.2.0", features=["nalgebra-bindings", "datasets"]}

nalgebra = { version="0.23.0", features = ["serde-serialize"]}

argmin = "0.3.1"

We load the dataset and split it into test and training sets, just like we did before. In case you are wondering where the function parse_csv comes from, it is defined in the first part of the series. The function train_test_split is defined in the smartcore framework.

1

2

3

4

5

6

7

let file = File::open("creditcard.csv").unwrap();

let credit: DMatrix<f64> = parse_csv(BufReader::new(file)).unwrap();

let x = credit.columns(0, 30).into_owned();

let y = credit.column(30).into_owned();

let (x_train, x_test, y_train, y_test) = train_test_split(&x, &y.transpose(), 0.2, true);

We also need to define the Sigmoid function.

1

2

3

4

5

6

7

8

9

fn sigmoid(v: f64) -> f64 {

if v < -40.0 {

0.0

} else if v > 40.0 {

1.0

} else {

1.0 / (1.0 + f64::exp(-v))

}

}

To predict a class of a new transaction we need to multiply transaction attributes, \(X\) by model parameters \(\beta\) and use sigmoid function to transform quantitative value into a probability estimate, \(p(X)\) . Once we have \(p(X)\) can apply our threshold to decide whether the transaction is fraudulent or not.

1

2

3

4

5

6

7

8

9

10

11

fn predict(x: &DMatrix<f64>, coeff: &DVector<f64>, intercept: f64) -> DVector<f64> {

let mut y_hat = (x * coeff).add_scalar(intercept);

y_hat.apply(|v| {

if sigmoid(v) > 0.5 {

1.0

} else {

0.0

}

});

y_hat.into_owned()

}

The only question that has not yet been resolved is how to estimate parameters of logistic regression, \(\beta\)? This is where optimization theory comes into play. To find estimates of \(\beta\) we need two things:

- A loss function that takes model parameters, \(\beta\) and produces a small value when these parameters are optimal and a large value otherwise.

- A framework that will help us to drive loss function to its minimum value. When we found global minimum of the lost function we know we found best values for parameters of the logistic regression.

As you might have noticed a lot depends on quality of the loss function. I won’t go into specifics of how to choose the proper loss function because this topic is outside the scope of this article. Also, logistic regression comes with a standard loss function that you can easily find in the web. The variant of the loss function that we are going to use here is defined as:

\[J(\beta) = -\frac{1}{n}\sum_{i=1}^n[y_i log(\sigma({X}\beta)) + (1- y_i)log(1 - \sigma({X}\beta))]\]

where \(\sigma(x)\) is our Sigmoid function. Loss function’s derivative is defined as

\[\frac{\partial J(\beta)}{\partial \beta} = \frac{1}{n} X^T(\sigma(X\beta) - y)\]

The best way to understand any function is to see it in action. Let’s say an optimizer found a model that predicts a large number, say 10.0 for some transaction. At the same time, since we have true labels, we know that this transaction is fraudulent, hence \(p(X)\) should be 1.0. When we apply Sigmoid function to the output of our model, 10.0 we get a value that is close to 1.0. When we plug both, estimated probability and true probability into our loss function we get a very small loss. This loss tells our optimizer that it found parameter values that are not far from optimal.

1

2

3

4

// y_true = 1.0, y_hat -> +inf

let y = 1.0;

let y_hat = 10.0;

y * sigmoid(y_hat).ln() + (1.0 - y) * (1.0 - sigmoid(y_hat)).ln()

-0.00004539889921682063On the other hand, when we get probability estimate that is far from the true value our loss will be huge and this difference will force optimizer to look for best parameters in some other direction.

1

2

3

4

// y_true = 1.0, y_hat -> -inf

let y = 1.0;

let y_hat = -10.0;

y * sigmoid(y_hat).ln() + (1.0 - y) * (1.0 - sigmoid(y_hat)).ln()

-10.000045398899218One good way to think about optimizer is to imagine a search function where you look for a solution that satisfies some conditions. The way you search depends on your optimization method. And what you search for depends on your loss function. Loss function defines not only your target but also tells your optimizer how far is it from the target. The search method is the algorithm that optimizer uses to adjust a search direction. Good optimization framework provides many optimization methods since no single method can be used to optimize all functions.

The good news is that argmin is just the right optimization framework we need, with an optimization method that can be used to find optimal parameters of the logistic regression. Let’s implement our loss funtion in Rust.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

use argmin::prelude::*;

use argmin::solver::linesearch::{ArmijoCondition, BacktrackingLineSearch};

use argmin::solver::quasinewton::LBFGS;

struct BinaryObjectiveFunction<'a> {

x: &'a DMatrix<f64>,

y: &'a DVector<f64>

}

impl<'a> ArgminOp for BinaryObjectiveFunction<'a> {

type Param = Vec<f64>;

type Output = f64;

type Hessian = Vec<Vec<f64>>;

type Jacobian = ();

type Float = f64;

// Our loss function is defined here

fn apply(&self, w: &Self::Param) -> Result<Self::Output, Error> {

let mut f = 0f64;

let (n, _) = self.x.shape();

for i in 0..n {

let wx = dot(w, &self.x, i);

f += self.y[i] * sigmoid(wx).ln() + (1.0 - self.y[i]) * (1.0 - sigmoid(wx)).ln();

}

Ok(-f)

}

// Gradient of the loss function

fn gradient(&self, w: &Self::Param) -> Result<Self::Param, Error> {

let (n, p) = self.x.shape();

let mut g = vec![0f64; w.len()];

for i in 0..n {

let wx = dot(w, &self.x, i);

let dyi = sigmoid(wx) - self.y[i];

for j in 0..p {

g[j] += dyi * self.x[(i, j)];

}

g[p] += dyi;

}

Ok(g)

}

}

Finally, let’s set up our optimization driver

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

fn optimize(x: &DMatrix<f64>, y: &DVector<f64>) -> Result<(DVector<f64>, f64), Error> {

let (_, p) = x.shape();

// Define cost function

let cost = BinaryObjectiveFunction { x, y };

// Define initial parameter vector

let init_param: Vec<f64> = vec![0f64; p + 1];

// Set condition

let cond = ArmijoCondition::new(0.5)?;

// set up a line search

let linesearch = BacktrackingLineSearch::new(cond).rho(0.9)?;

// Set up solver

let solver = LBFGS::new(linesearch, 7);

// Run solver

let res = Executor::new(cost, solver, init_param)

// .add_observer(ArgminSlogLogger::term(), ObserverMode::Always)

.max_iters(100)

.run()?;

let w = DVector::from_row_slice(&res.state().best_param);

Ok((w.rows(0, 30).into_owned(), w[30]))

}

Everything is set for the launch! Let’s see how far are we from the AUC metric we’ve obtained in Python.

1

2

3

4

5

let (coeff, intercept) = optimize(&x_train, &y_train.transpose()).unwrap();

let y_hat = predict(&x_test, &coeff, intercept);

println!("{}", roc_auc_score(&y_test, &y_hat.transpose()));

The number I’ve got was 0.91, which is not far from the AUC we’ve got in Python. You might get a different value because every time you run this code you will get a different train/test split due to randomization. But the important point here is that performance of our algorithm is very similar to the performance of the top of the line Scikit Learn’s implementation.

Conclusion

Once again we’ve assembled a machine learning algorithm in Rust from scratch, using very little code and achieved performance that is on par with the implementation provided by one of the best machine learning frameworks available in Python.

We also learned a new technique that can be used to implement many other machine learning algorithms, including but not limited to Neural Network, SVM and Collaborative filtering.

In my next and final article dedicated to machine learning in Rust I will introduce you to a new framework that will make building statistical model in Rust a breeze. Stay in touch!

Sources

- “An Introduction to Statistical Learning”, James G., Witten D., Hastie T., Tibshirani R.

- “On the Limited Memory Method for Large Scale Optimization”, Nocedal et al., Mathematical Programming, 1989

- The Credit Card Fraud Detection dataset

- argmin: mathematical optimization in pure Rust

- nalgebra: linear algebra library for Rust.

- SmartCore: a comprehensive library for machine learning and numerical computing in Rust

- Source code